WASHINGTON — Releasing an audio recording of a special counsel’s interview with President Joe Biden could spur deepfakes and disinformation that trick Americans, the Justice Department said, conceding the U.S. government could not stop the misuse of artificial intelligence ahead of this year’s election.

A senior Justice Department official raised the concerns in a court filing on Friday that sought to justify keeping the recording under wraps. The Biden administration is seeking to convince a judge to prevent the release of the recording of the president’s interview, which focused on his handling of classified documents.

The admission highlights the impact AI-manipulated disinformation could have on voting and the limits of the federal government's ability to combat it.

A conservative group that’s suing to force the release of the recording called the argument a “red herring.”

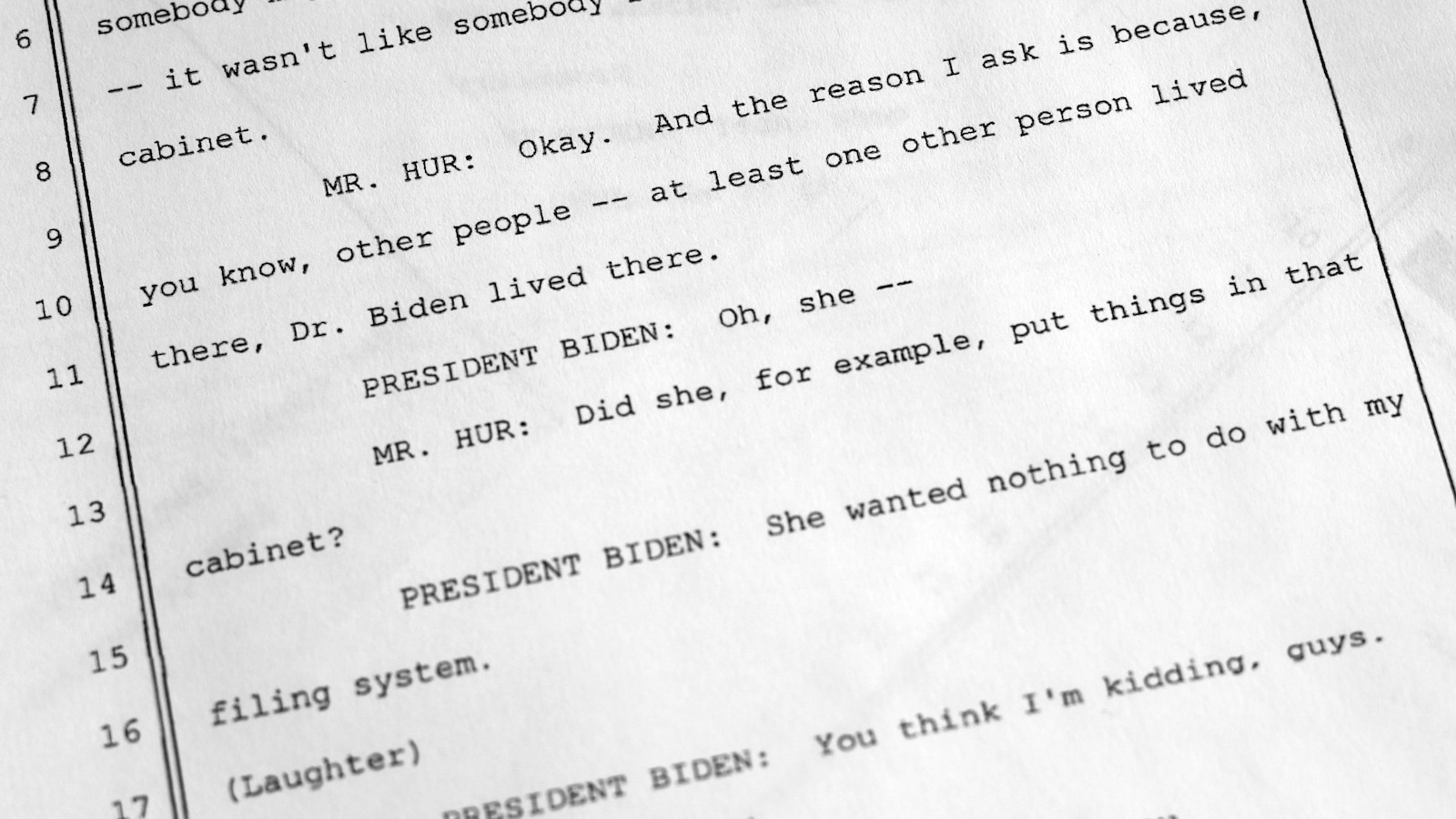

Mike Howell of the Heritage Foundation accused the Justice Department of trying to protect Biden from potential embarrassment. A transcript of the interview showed the president struggling to recall certain dates and confusing some details, while displaying deep recall of information at other times.

“They don’t want to release this audio at all,” said Howell, executive director of the group’s oversight project. “They are doing the kitchen sink approach and they are absolutely freaked out they don’t have any good legal argument to stand on.”

The Justice Department declined to comment Monday beyond its filing.

Biden asserted executive privilege last month to prevent the release of the recording of his two-day interview in October with special counsel Robert Hur. The Justice Department has argued witnesses might be less likely to cooperate if they know their interviews might become public. It has also said that Republican efforts to force the audio’s release could make it harder to protect sensitive law enforcement files.

Sen. Mark Warner, the Democratic chair of the Senate Intelligence Committee, told The Associated Press that he was concerned that the audio might be manipulated by bad actors using AI. Nevertheless, the senator said, it should be made public.

“You’ve got to release the audio,” Warner said, though it would need some “watermarking components, so that if it was altered” journalists and others “could cry foul.”

In a lengthy report, Hur concluded that no criminal charges were warranted over Biden's handling of classified documents. His report described the 81-year-old Democrat's memory as "hazy," "poor" and having "significant limitations." It noted that Biden could not recall such milestones as when his son Beau died or when he served as vice president.

Biden's aides have long been defensive about the president's age, an issue that has drawn relentless attacks from Donald Trump, the presumptive GOP nominee, and other Republicans. Trump is 77.

The Justice Department's concerns about deepfakes came in court papers filed in response to legal action brought under the Freedom of Information Act by a coalition of media outlets and other groups, including the Heritage Foundation and Citizens for Responsibility and Ethics in Washington.

An attorney for the media coalition, which includes The Associated Press, said Monday that the public has the right to hear the recording and weigh whether the special counsel “accurately described” Biden’s interview.

“The government stands the Freedom of Information Act on its head by telling the Court that the public can’t be trusted with that information,” the attorney, Chuck Tobin, wrote in an email.

Bradley Weinsheimer, an associate deputy attorney general for the Justice Department, acknowledged "malicious actors" could easily use unrelated audio recordings of Hur and Biden to create a fake version of the interview.

However, he argued, releasing the actual audio would make it harder for the public to distinguish deepfakes from the real one.

“If the audio recording is released, the public would know the audio recording is available and malicious actors could create an audio deepfake in which a fake voice of President Biden can be programed to say anything that the creator of the deepfake wishes,” Weinsheimer wrote.

Experts in identifying AI-manipulated content said the Justice Department had legitimate concerns in seeking to limit AI’s dangers, but its arguments could have far-reaching consequences.

“If we were to go with this strategy, then it is going to be hard to release any type of content out there, even if it is original,” said Alon Yamin, co-founder of Copyleaks, an AI-content detection service that primarily focuses on text and code.

Nikhel Sus, deputy chief counsel at Citizens for Responsibility and Ethics in Washington, said he has never seen the government raise concerns about AI in litigation over access to government records. He said he suspected such arguments could become more common.

“Knowing how the Department of Justice works, this brief has to get reviewed by several levels of attorneys,” Sus said. “The fact that they put this in a brief signifies that the Department stands behind it as a legal argument, so we can anticipate that we will see the same argument in future cases.”

The Justice Department recently expressed concern that deepfake technology could be used to manipulate audio of President Joe Biden's interview with the special counsel, highlighting the potential for artificial intelligence (AI) to be misused to create deceptive content. Deepfake technology manipulates audio and video to make it appear that someone said or did something they did not actually say or do.

In the case of the Biden interview audio, the concern is that deepfake technology could be used to fabricate statements and spread misinformation, potentially sowing confusion and distrust among the public. This is particularly troubling in politics, where misinformation can have serious consequences for public opinion and decision-making.

The Justice Department’s concerns underscore a growing awareness of the potential dangers of deepfake technology. While AI has the potential to revolutionize many aspects of society, including healthcare, transportation, and entertainment, it also has the capacity to be misused for malicious purposes.

One of the main worries about deepfake technology is its ability to create highly realistic and convincing fake content that can be difficult to detect. This poses a significant challenge for law enforcement and policymakers in determining how to regulate and combat the spread of deceptive deepfake content.

In its filing, the Justice Department cited these risks as a reason to withhold the recording, while conceding that the federal government could not stop the misuse of AI ahead of this year's election. That admission underscores the importance of developing tools and strategies to detect and counter fake audio and video content.

In addition to government efforts, tech companies and researchers are working on solutions to the deepfake threat, including algorithms that detect manipulated media and tools that verify the authenticity of audio and video content.

Ultimately, the Justice Department's concerns about deepfakes of the Biden interview audio serve as a reminder of the need for continued vigilance and regulation in the use of AI. As the technology continues to advance, it is essential to remain aware of its risks and take proactive measures to prevent its misuse.